A Developer’s Journey - Choice and Challenges Between Golang Vs. Rust

Written by Devops Traveler Tales, 8 October 2024

Go and Rust are becoming prominent programming languages, each known for its unique strengths.

There’s no argument against the fact that both are excellent.

That is probably why discussions comparing Go and Rust frequently pop up, debating which one is superior. [1], [2]

In this post, rather than comparing and measuring, I want to walk through my personal experiences, explaining the decisions we made along the way.

Quick Intro

Golang and Rust

Before diving into our story, let's briefly introduce Go and Rust, keeping it short (super important).

Go, often praised for its simplicity, is an easy-to-learn language especially for beginners. It features garbage collection (GC) for memory management and supports user-level threads called goroutines.

On the other hand, Rust prioritizes stability and performance. Its syntax is more complex, but it manages memory through an ownership model without GC, and its ecosystem widely embraces Tokio (https://tokio.rs/), an asynchronous runtime for lightweight, user-level tasks.

That’s all!🎉

Of course, there are many more differences and nuances, but this should be enough for understanding today’s story!

Meet the Characters in the story

- Negoguma: Our DevOps chapter lead, often named after foods for testing purposes. Also known for calming the occasional workplace chaos.

- RiHo: The backend chapter leader, curious about various technologies. He’s working on real-time game servers.

- Kururu: A DevOps engineer who started their career in Japan. Fluent in Japanese, sometimes surprising us with their native-level skills.

- Tales: That’s me! I’m a bit shy introducing myself, but I love infrastructure work.

The Story started from…

In May 2023, we were in the midst of rebuilding a chat service.

During load testing, RiHo mentioned that login times for mock users were taking around 300-500ms, way too slow. I, Tales, began investigating!

At first glance, nothing in the login logic seemed likely to cause such a delay. But after checking the test client’s requests, I discovered something unusual in RiHo’s test logic.

Each thread was registering users and then logging them in during the chat load tests. It turned out the login itself was only taking 20-30ms, but the registration process was eating up most of the latency.

At first glance, it seemed reasonable, but registration was not a necessary part of this test. So I suggested to RiHo that we move the user registration into the setup phase [3], [4], allowing the actual test to focus on the chat load.
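A minimal Go sketch of that idea, not the actual test code: `registerUser` and `loginUser` are hypothetical stand-ins for the real requests, and the sleeps only mimic "slow registration, fast login". The point is that the timer starts after the setup phase has finished.

```go
package main

import (
	"fmt"
	"time"
)

// registerUser is a hypothetical stand-in for the slow registration request.
func registerUser(id int) string {
	time.Sleep(5 * time.Millisecond) // mimics the expensive part
	return fmt.Sprintf("user-%d", id)
}

// loginUser is a hypothetical stand-in for the fast login request.
func loginUser(name string) bool {
	time.Sleep(1 * time.Millisecond)
	return name != ""
}

// setup runs once before the measured phase and pre-registers all mock users.
func setup(n int) []string {
	users := make([]string, 0, n)
	for i := 0; i < n; i++ {
		users = append(users, registerUser(i))
	}
	return users
}

// measureLogins times only the login calls, so registration cost is excluded.
func measureLogins(users []string) (ok int, elapsed time.Duration) {
	start := time.Now()
	for _, u := range users {
		if loginUser(u) {
			ok++
		}
	}
	return ok, time.Since(start)
}

func main() {
	users := setup(10) // setup phase: not part of the measurement
	ok, elapsed := measureLogins(users)
	fmt.Printf("logged in %d users in %v\n", ok, elapsed)
}
```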

But wait—why was registration so slow?

The Mystery of Slow Registrations

The registration logic had become complex over time, and after digging through it alone, I needed help from Negoguma, who had been with the company since its early days. Even Negoguma couldn’t find the reason quickly!

Together, we finally found the culprit: the scrypt function used for password hashing was the cause of the problem!

In the past, our service had chosen scrypt [5], a deliberately slow but highly secure password-hashing function, and configured it with high parameter values to ensure security strength.

Additionally, our development servers had lower resource allocations than production, making everything even slower.

Now that we knew the cause, we were faced with a new problem—how do we handle this slow registration logic?

Although user registration on the production servers wasn’t as slow as what RiHo experienced during testing on the development servers, it still was not as fast as we would have liked. On top of that, the production resources were excessively over-allocated.

Changing the registration on the authentication server would also mean refactoring the login flow and performing a full migration, which seemed like an overwhelming amount of work for me.

As we were discussing it, ONE great idea suddenly struck me!!

Tales: "What if we offload the scrypt process from the authentication server?"

After brainstorming with Negoguma, we agreed that the nature of the scrypt task was fundamentally different from the other workloads of the authentication server.

The authentication server needed to handle all API requests, making it the server with the highest RPS (Requests Per Second), and it had to be designed to support I/O-intensive workloads.

However, the scrypt algorithm was a computational task that placed a heavy load on the CPU.

Once we aligned on this, we concluded that the scrypt task should be offloaded to a separate server with its own resources.

The plan was for the authentication server to be handled by several replicas with lighter resources, while the CPU-intensive scrypt process would run on fewer servers with larger resources, using threads to manage the workload.
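The "fewer servers, more cores, a bounded number of workers" idea can be sketched in Go as a fixed-size worker pool. This is an illustration under assumptions, not the server we deployed: `expensiveHash` is a hypothetical stand-in that iterates SHA-256 purely to burn CPU (it is not scrypt and not a safe password hash), chosen so the sketch needs only the standard library.

```go
package main

import (
	"crypto/sha256"
	"fmt"
	"runtime"
	"sync"
)

// expensiveHash is a stand-in for scrypt: it iterates SHA-256 to burn CPU.
// It is NOT a password hash; it only keeps this sketch stdlib-only.
func expensiveHash(password string, rounds int) [32]byte {
	sum := sha256.Sum256([]byte(password))
	for i := 1; i < rounds; i++ {
		sum = sha256.Sum256(sum[:])
	}
	return sum
}

// hashAll fans the passwords out to a fixed number of workers, so the
// CPU-bound work never runs on more goroutines than we decided to allow.
func hashAll(passwords []string, workers, rounds int) [][32]byte {
	results := make([][32]byte, len(passwords))
	jobs := make(chan int)
	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := range jobs {
				// Each index is written by exactly one worker.
				results[i] = expensiveHash(passwords[i], rounds)
			}
		}()
	}
	for i := range passwords {
		jobs <- i
	}
	close(jobs)
	wg.Wait()
	return results
}

func main() {
	// One worker per available core is a common starting point for CPU-bound work.
	out := hashAll([]string{"a", "b", "c"}, runtime.NumCPU(), 10000)
	fmt.Printf("hashed %d passwords, first digest starts with %x\n", len(out), out[0][:4])
}
```

Sizing the pool to the core count keeps the heavy hashing from oversubscribing the CPU, which is the property that made a separate, larger server attractive in the first place.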

This decision was also influenced by the fact that the authentication server was built with Node.js, which is not well suited for heavy computational tasks.

Now, we had the solution.

The next question was: which language should we use to implement it?

Round 1: Go vs. Rust

We both agreed that since it was a computational task, using a compiled language made the most sense. However, we had different preferences when it came to which language to implement it in.

Negoguma preferred Golang, while I (Tales) had been wanting to try Rust.

So, we decided:

Negoguma & Tales: "Alright! Let’s each build a server using different languages, and then compare which one performs better!"

The tech stack was straightforward: build a server to handle only the scrypt function and return a response.

The performance tests showed that the Golang server’s response time was 190–200ms, while the Rust server came in slightly faster🎉 at 180–190ms.

Despite Rust’s edge in speed, there was something even I, Tales, could not deny.

In DevOps, teamwork is everything, and when it comes to teamwork, code readability becomes incredibly important.

We decided to go with the Golang server for the sake of consistency in our tech stack, because Rust’s unfamiliar syntax would make the code harder for others to read and maintain. Moreover, we already had several Golang servers in production, making Golang the more practical choice.

As a result, we reduced the resources for the authentication server from 1 core and 500MiB down to 200m (0.2 core) and 200MiB. Even with these limited resources, it performed remarkably well.

After a series of twists and turns, the first round of Golang vs. Rust ended with Golang's victory. The scrypt server was separated from the authentication server and successfully deployed into the production environment.

Wait a second, did you say first round?

Round 2: Go vs. Rust

Just when we were dreaming sweetly of the scrypt server running smoothly, we discovered that it kept crashing. Each time, the server restarted quietly, like a silent assassin, and the number of restarts gradually increased over time.

In a PANIC, I began investigating, only to be even more shocked at the root cause.

Tales: OMG? OOM?

The scrypt server, being CPU-intensive, had its memory allocated at what we thought was an appropriate level, but for some unknown reason, the working set memory kept increasing, eventually leading to an Out of Memory (OOM) error that caused the server to crash.

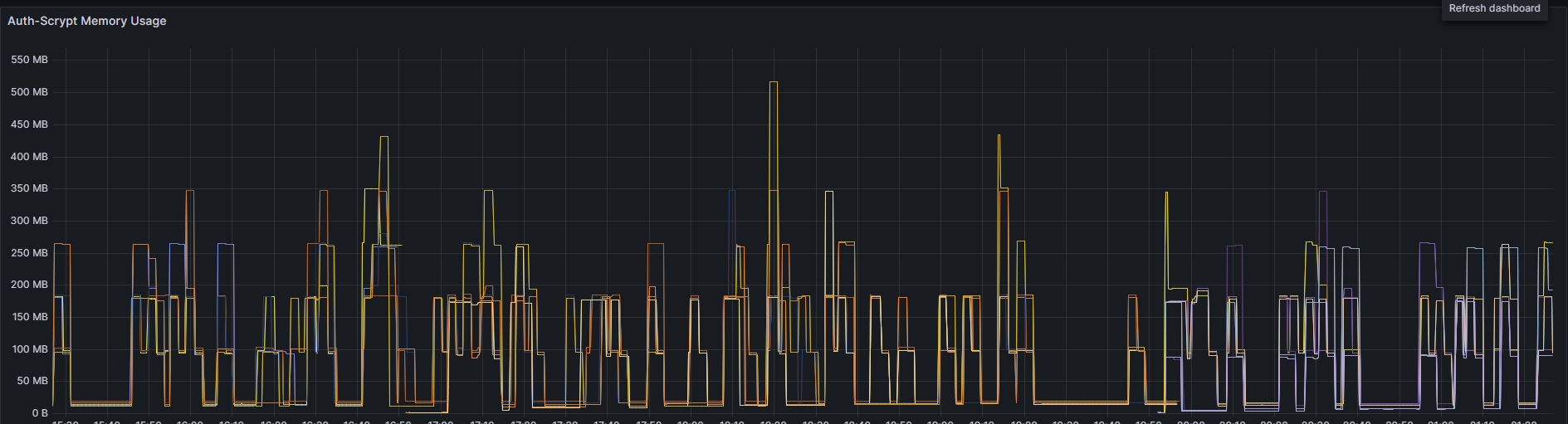

Even after temporarily increasing the memory, the crashes persisted. Then I looked at the monitoring in Grafana. Oh... how I regretted my oversight.

It revealed a classic "apartment-building-shaped" memory graph, indicating that the garbage collector (GC) was not kicking in at the right time...! [8]

At that time, none of us were fully familiar with the detailed configuration of Golang servers. (Now our team is aware of Golang's soft limit settings and how Golang manages CPU allocation.😊)

Realizing this, I figured there must be a setting for the GC, so I stayed up late, endlessly searching online for a solution.

With the production environment servers restarting, I could not just go home, fearing a bigger disaster might occur the next day.

Seeing me working late, Kururu came over, curious about what I was doing. After explaining the whole situation, Kururu jumped in to help and started brainstorming with me.

Until that point, I had been pale with worry, deeply concerned that a major incident might occur. However, working with Kururu, who has a curious and enthusiastic personality, made me feel like I had a strong ally.

Together, we began hunting for the problem like kids excited by a new challenge.

Kururu wrote a script to reproduce the issue and test whether the problem was resolved.

Meanwhile, I started thinking: 'What if we could control the GC by tweaking the code?' With this approach, I attempted to modify the code so that memory would be allocated on the stack rather than the heap. [9]
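For readers unfamiliar with the stack-versus-heap idea, here is a small Go illustration. It is a sketch, not code from the scrypt server: `buf`, `sink`, `escapes`, and `staysLocal` are hypothetical names. A value whose pointer outlives the function has to live on the heap and later be collected by the GC, while a value used only locally can stay on the stack. `testing.AllocsPerRun` from the standard library counts heap allocations per call.

```go
package main

import (
	"fmt"
	"testing"
)

// buf is a hypothetical 1 KiB scratch buffer used only for this illustration.
type buf struct{ data [1024]byte }

// sink is a package-level pointer; storing into it forces a value to escape.
var sink *buf

// escapes stores a pointer to its buffer in a global, so the compiler
// must place the buffer on the heap, where the GC has to reclaim it later.
//
//go:noinline
func escapes() {
	b := &buf{}
	b.data[0] = 1
	sink = b
}

// staysLocal only uses its buffer inside the function, so the compiler
// can keep it on the goroutine stack and no GC work is created.
//
//go:noinline
func staysLocal() byte {
	var b buf
	b.data[0] = 1
	return b.data[0]
}

// allocsPerCall reports the average number of heap allocations per call of f.
func allocsPerCall(f func()) float64 {
	return testing.AllocsPerRun(100, f)
}

func main() {
	fmt.Println("escapes:   ", allocsPerCall(escapes))
	fmt.Println("staysLocal:", allocsPerCall(func() { staysLocal() }))
}
```

Building with `go build -gcflags=-m` prints the compiler's escape-analysis decisions, which is a quicker way to check where a particular value ends up.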

But it did not solve the issue, so we tried another approach: switching the web framework from Fiber [6] to Gin [10]. Though we tested this new hypothesis, we didn’t expect it to fully resolve the problem. Nonetheless, working with someone like Kururu often led to various insights and new learning opportunities as we discussed the issue.

As we brainstormed possible solutions with various hypotheses, sharing the history of the scrypt server, Kururu suddenly asked a game-changing question:

Kururu: "Do you think the Rust server would have this problem?"

Tales: ...!!

FYI, to be honest, I had completely forgotten about the Rust server up until that point.

The code for it had been left in an old commit. Kururu’s question sparked my curiosity, and I decided to roll back to the old commit and revive the Rust server.

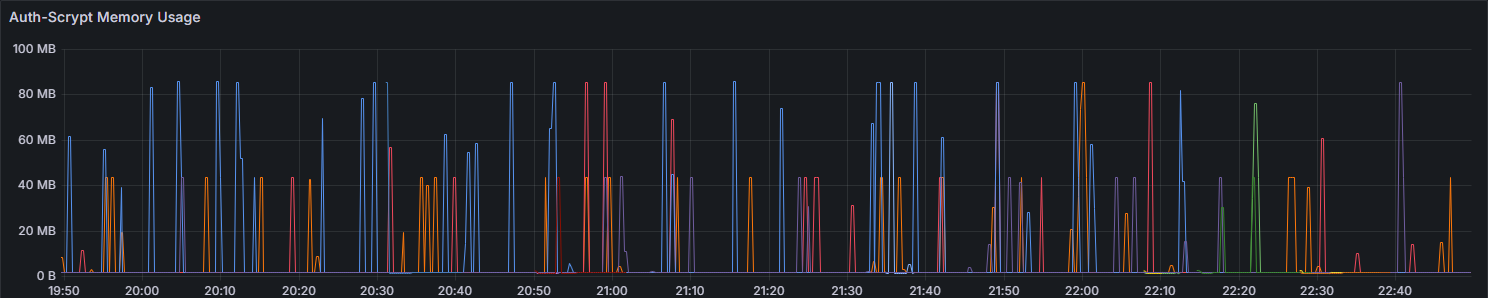

After monitoring it under a real workload, we noticed that Rust’s memory usage graph was completely different: it resembled a needle-like pattern rather than an apartment-building shape, with memory being released as soon as it was no longer in use.

In the end, we rewrote the scrypt server in Rust, and Round 2 concluded with Rust as the clear winner.

Epilogue

#1

At the time, both Kururu and I, Tales, were certain that there must be GC tuning options in Golang. Today, we have applied soft limits to all Golang servers using GOMEMLIMIT [11] and have also implemented CPU control options. [12] However, we could not deny the significant advantage that Rust servers ran smoothly without needing any of these adjustments.
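As a rough illustration of what such settings look like, the Go runtime exposes both knobs programmatically: `debug.SetMemoryLimit` (the same soft limit that the `GOMEMLIMIT` environment variable sets) and `runtime.GOMAXPROCS`. The sketch below is not our production configuration. The 512MiB container size, the 90% headroom, and the helper names `applyLimits` and `currentLimits` are assumptions made for the example.

```go
package main

import (
	"fmt"
	"runtime"
	"runtime/debug"
)

// applyLimits sets a soft memory limit for the Go runtime and caps the
// number of OS threads that may execute Go code simultaneously.
func applyLimits(memLimitBytes int64, maxProcs int) {
	debug.SetMemoryLimit(memLimitBytes)
	runtime.GOMAXPROCS(maxProcs)
}

// currentLimits reads both settings back without changing them:
// a negative memory limit and a GOMAXPROCS argument of 0 are query-only.
func currentLimits() (memLimitBytes int64, maxProcs int) {
	return debug.SetMemoryLimit(-1), runtime.GOMAXPROCS(0)
}

func main() {
	// Hypothetical container: 512MiB memory limit and 2 CPUs.
	const containerMemory = 512 << 20

	// Leave some headroom below the container limit so the GC gets a chance
	// to run harder before the kernel's OOM killer steps in.
	applyLimits(containerMemory*9/10, 2)

	mem, procs := currentLimits()
	fmt.Printf("soft memory limit: %d MiB, GOMAXPROCS: %d\n", mem>>20, procs)
}
```

In a container, the same effect is usually achieved without code changes by setting the `GOMEMLIMIT` and `GOMAXPROCS` environment variables. Note that the memory limit is soft: the GC works harder as the heap approaches it, but the runtime does not guarantee staying below it.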

#2

The original scrypt server in Rust, built with Actix [7], has since been rewritten with Axum [14] to better utilize Tokio [13].

The Axum-based scrypt server now operates with an average latency of 150–170ms, making it faster than before.

#3

This experience taught us invaluable lessons about trial and error when comparing Go and Rust.

But in reality, framing everything as Go vs. Rust can be misleading. What truly matters is selecting the right tool based on the specific needs of the application.

#4

At our company, the tech stack is still around 70% Node.js and 30% Golang, with Rust only being used for that one scrypt server.

It was a valuable experiment, but practicality and team collaboration remain key considerations when choosing the right tool for the job.

Meanwhile, we also run Rust logic inside Node.js through WebAssembly (Wasm) [15] in certain cases, to handle specific tasks efficiently.

Acknowledgement

I want to thank everyone involved in this journey!

RiHo, for always prioritizing experimentation and verification, and for being the catalyst that kicked off this story.

Negoguma, for being open to considering different languages and for always engaging in thoughtful technical discussions with me.

And especially Kururu, for making this technical adventure so enjoyable. Despite it not being part of his job, he eagerly jumped into the deep dive just because he found it interesting. Together, we ran tests late into the night, had fun discussing technology, and even after going home, he continued experimenting with Python and Nginx. I am grateful for his curiosity, enthusiasm, and invaluable support.

Having great team members made this an exciting and fun journey, where we could exchange ideas, experiment, and enjoy the R&D process.

© 2024 AFI, INC. All Rights Reserved. All Pictures cannot be copied without permission.

References

[1] Rust vs Go in 2024

[2] C# vs Rust vs Go. A performance benchmarking in Kubernetes

[3] phase of test: Setup and Teardown

[4] phase of test: Test lifecycle

[5] why_does_scrypt_run_so_much_slower_than_sha256

[6] go-fiber web framework

[7] rust actix web framework

[8] Identifying Non-Heap Class Leaks

[9] Understanding Allocations in Go

[10] gin web framework

[11] Kubernetes Memory Limits and Go

[12] Kubernetes CPU Limits and Go

[13] asynchronous runtime in rust: tokio

[14] rust axum web framework

[15] WebAssembly - MDN
