LLM Theory and Prompt Utilization Strategy Through the Lens of Probability - 1

Written by Devops Traveler Tales, 11 July 2025

The Heart of LLM Utilization: Probability Control

Everyone uses large language models (LLMs) these days. LLM-powered chatbots and content creation tools, led by ChatGPT, have become established as key tools in our daily tasks and projects. Yet why do the results vary so much from person to person, even with the same model? Some obtain the result they want immediately, while others are repeatedly frustrated by irrelevant answers or subpar results.

What is the problem here? Is it the model's performance? If not, should we feed more data into the model, or upgrade to a more expensive model?

This article attempts to present an answer to these questions.

An LLM is fundamentally a system that generates text based on probability: every result you obtain is the outcome of a series of probabilistic choices. This is why using LLMs effectively requires you to control that probability precisely and deliberately.

In this article, we provide a comprehensive and in-depth examination of LLMs, encompassing the fundamentals of text generation, the strategies and limitations of prompting, and advanced techniques that facilitate stronger reasoning. Rather than just learning how to craft better prompts, you will develop a precise understanding of the probabilistic mechanisms that drive word selection inside the model and learn how to control them. We will also explore advanced prompting strategies such as Chain-of-Thought (CoT) and Tree-of-Thought (ToT), which can help you guide the model toward more accurate outputs when solving complex problems.

Finally, we will examine the structural vulnerabilities that stem from the inherently probabilistic nature of LLM text generation and discuss what we can do to avoid the mistakes that result from them.

Fundamentals of Text Generation in LLM

The first step to using LLMs effectively is understanding how they generate text. As is often mentioned in YouTube videos and blogs, an LLM is a program that predicts the next word (token) that most appropriately follows the given text.

The way an LLM predicts the next word is fundamentally statistical. By training on massive amounts of text data, the model learns how often certain words tend to follow others, effectively building a "probability dictionary." For example, after the word "apple," words like "tree" or "pear" are likely to appear frequently, while a word like "cloudy" is extremely rare. Having learned these statistical patterns, the LLM internally asks itself, "What word would fit best next?" based on the user's input, and then calculates a probability for each candidate word.
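To make the "probability dictionary" idea concrete, here is a toy Python sketch that counts which word follows which in a tiny, made-up corpus and turns those counts into probabilities (a bigram model). It is only an analogy under that assumption: as discussed below, a real LLM does not store such a table but computes probabilities with its parameters. The function name `build_bigram_table` and the corpus are hypothetical.

```python
from collections import Counter, defaultdict


def build_bigram_table(corpus):
    """Count which word follows which, then normalize the counts into probabilities."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair each word with the word that immediately follows it
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    # Normalize each word's follower counts so they sum to 1
    return {
        word: {nxt: n / sum(followers.values()) for nxt, n in followers.items()}
        for word, followers in counts.items()
    }


# Hypothetical toy corpus, for illustration only
corpus = [
    "the apple tree is tall",
    "an apple tree grew here",
    "apple pear and plum",
    "the apple tree again",
]
table = build_bigram_table(corpus)
print(table["apple"])  # {'tree': 0.75, 'pear': 0.25}
```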

Internally, this process goes through the following steps:

1. Context-Based Scoring

The LLM considers every possible candidate word (token) that could follow the input sentence and assigns each one a numerical score, often called a logit, reflecting how naturally it fits the given context.

2. Conversion Into Probability Distribution

These scores are then normalized, typically with the softmax function, so that they sum to 100%, producing a probability distribution over the candidates. For example, after "Today's meeting," phrases such as "will take place," "has been canceled," and "has been prepared" receive high probabilities, while less natural candidates like "is okay" and "is difficult" are assigned lower probabilities.
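A common way to turn raw scores into probabilities that sum to 100% is the softmax function, which exponentiates each score and divides by the total. The minimal sketch below assumes made-up scores and treats whole phrases as single candidates for readability; a real model scores individual tokens over its entire vocabulary.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1 (i.e., 100%)."""
    # Subtracting the max score avoids overflow and does not change the result
    max_score = max(scores.values())
    exps = {cand: math.exp(s - max_score) for cand, s in scores.items()}
    total = sum(exps.values())
    return {cand: e / total for cand, e in exps.items()}


# Hypothetical scores for candidates following "Today's meeting"
scores = {
    "will take place": 4.0,
    "has been canceled": 3.5,
    "has been prepared": 3.0,
    "is okay": 0.5,
    "is difficult": 0.0,
}
probs = softmax(scores)
for phrase, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(f"{phrase:>18}: {p:.1%}")
```

Higher scores always map to higher probabilities, but the exponential exaggerates the gaps between them, which is why a handful of candidates usually dominate the distribution.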

3. Ensuring Diversity Using Top-k and Top-p Sampling

If the model always picked the candidate with the highest probability (known as greedy decoding), the results would tend to become monotonous. To introduce diversity, LLMs commonly use one of two sampling methods:

- Top-k Sampling: The model keeps only the k candidates with the highest probabilities, then randomly selects one of them, weighted by their probabilities, to introduce natural variation.

- Top-p (nucleus) Sampling: The model ranks candidates by probability and adds them to a pool until their combined probability reaches a threshold p (for example, 0.9, or 90%). It then randomly selects from this pool, again weighted by probability. Because the pool grows when many continuations are plausible, this allows for more unexpected and creative outputs.
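The two filters can be sketched in a few lines of Python. This is an illustrative implementation, not any particular library's code: the distribution is hypothetical, and after filtering, the surviving probabilities are renormalized and one candidate is drawn with `random.Random.choices`, weighted by probability.

```python
import random


def top_k_filter(probs, k):
    """Keep the k most probable candidates and renormalize them to sum to 1."""
    kept = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in kept)
    return {word: p / total for word, p in kept}


def top_p_filter(probs, p):
    """Keep the smallest high-probability set whose cumulative probability reaches p."""
    kept, cumulative = [], 0.0
    for word, prob in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept.append((word, prob))
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(prob for _, prob in kept)
    return {word: prob / total for word, prob in kept}


def sample(pool, rng):
    """Draw one candidate from the pool, weighted by its renormalized probability."""
    words = list(pool)
    return rng.choices(words, weights=[pool[w] for w in words], k=1)[0]


# Hypothetical distribution over the word after "apple"
probs = {"tree": 0.5, "pear": 0.3, "juice": 0.15, "cloudy": 0.05}
print(top_k_filter(probs, k=2))    # only "tree" and "pear" survive
print(top_p_filter(probs, p=0.9))  # "tree", "pear", "juice" (0.5 + 0.3 + 0.15 = 0.95 >= 0.9)
print(sample(top_p_filter(probs, p=0.9), random.Random(0)))
```

Top-p adapts to the shape of the distribution: when it is flat, more candidates are needed to reach the threshold and the pool grows; when it is sharply peaked, the pool may shrink to a single candidate.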

4. Using "Temperature" to Adjust Creativity and Stability

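Lastly, a parameter called "temperature" controls the balance between consistency and creativity. It works by dividing each score by the temperature value before the scores are converted into probabilities.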

- At a low temperature (e.g., 0.2), the model focuses more on high-probability candidates, producing more stable and predictable outputs.

- At a high temperature (e.g., 0.8), even lower-probability candidates have a chance of being selected, resulting in more creative and diverse outcomes.
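Temperature is usually implemented by dividing each score by the temperature value before the softmax. The sketch below, using hypothetical scores, shows how the probability of the top candidate changes with temperature. It assumes a strictly positive temperature, since dividing by zero is undefined.

```python
import math


def softmax_with_temperature(scores, temperature):
    """Divide each score by the temperature, then apply softmax."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


scores = [4.0, 3.0, 1.0]  # hypothetical scores for three candidates
for t in (0.2, 0.8, 1.5):
    probs = softmax_with_temperature(scores, t)
    print(t, [round(p, 3) for p in probs])
```

As the temperature approaches 0, the distribution approaches always picking the top candidate; as it grows, the distribution flattens toward uniform, giving lower-probability candidates a real chance.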

In summary, the overall process LLMs follow to generate text can be outlined as:

『Score candidates based on context → Convert scores into a probability distribution → Narrow the candidate pool using Top-k or Top-p sampling → Adjust output diversity with temperature』

On the surface, selecting the next word may seem like a simple task, but behind the scenes lies a complex "probability dictionary" powered by billions of parameters.

From a Massive Probability Dictionary to a True Conversational AI

At its core, an LLM can be seen as a massive probability dictionary designed to predict the most appropriate next word. In reality, it doesn't store probabilities directly. Instead, it houses a language prediction engine comprising billions of parameters. This engine calculates, in real time, the probabilities of possible following words based on the input sentence and selects the one that fits most naturally.

This prediction mechanism alone is enough to create a natural sentence. However, "context signals" are crucial for an LLM to move beyond functioning as an auto-completion device and evolve into a true conversational AI. What's interesting is that you can create context signals through simple "framing" instead of complex algorithms.

Q/A Framing: Creating Conversation with Minimal Structure

In early experiments with GPT-3, researchers discovered something surprising: by simply adding the labels "Question:" and "Answer:" around an input sentence, the LLM moved beyond functioning as a basic autocomplete engine and began behaving like a question-answering interface.

Answer:

As in the example above, simply placing "Question:" before the input and "Answer:" before the model's reply gives the model a clear signal that it is now expected to respond to the question. As a result, the model begins explaining the meeting naturally. This is a powerful and effective way to elicit conversational behavior, even in a zero-shot setting, with no example answers and no further training.
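In code, Q/A framing is nothing more than a string template. The minimal sketch below assumes a plain text-completion model; the helper name `qa_frame` and the sample question are hypothetical. Because the prompt ends with "Answer:", the likeliest continuation becomes an answer to the question rather than more question text.

```python
def qa_frame(question):
    """Wrap a raw input in Question:/Answer: labels so a completion model treats it as a Q&A turn."""
    return f"Question: {question.strip()}\nAnswer:"


# Hypothetical input question
prompt = qa_frame("What will be discussed at today's meeting?")
print(prompt)
```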

Role Message Structure of InstructGPT: Embodying a Persona

The question-answer framing proved effective in transforming the LLM into a conversational interface; however, it had limitations when it came to controlling the model's tone or maintaining consistent behavior. In cases where the goal was not just to answer questions but to respond with a particular personality or attitude, a more advanced strategy was needed.

To address this, OpenAI built on InstructGPT (2022), a model fine-tuned to follow instructions, and its later chat models adopted a structure that assigns a "role" to each message. This role-based format is written as a JSON list of messages, as follows:

[
  { "role": "system", "content": "You are an assistant aimed at providing kind and accurate information." },
  { "role": "user", "content": "Hi. How's the weather today?" },
  { "role": "assistant", "content": null }
]

Each element of this structure has a clear purpose:

1. system

This message defines the overall nature and guidelines of the conversation. By setting behavioral directives, such as "be kind and accurate" or "explain concisely and technically," it guides the model to maintain a consistent persona in its responses.

2. user

This is where the user's actual query or request is presented. Natural, conversational language can be used freely at this stage.

3. assistant

Here, the LLM generates its response. It draws upon both the guidelines set in the system message and the user's input to craft its reply.

This role-message structure enables LLMs to go beyond simple information delivery, allowing them to evolve into conversational AIs with a consistent tone and a clearly defined character that meets user expectations.
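The role-message list can also be assembled programmatically. The sketch below is a hypothetical helper (`build_messages`), not part of any SDK. It stops at the user turn because, in chat-style APIs, the assistant message (shown with null content above) is what the model returns rather than something the caller fills in.

```python
import json
from typing import Optional

ALLOWED_ROLES = {"system", "user", "assistant"}


def build_messages(system: str, user: str, history: Optional[list] = None) -> list:
    """Assemble a role-tagged message list: system persona, earlier turns, then the new user query."""
    messages = [{"role": "system", "content": system}]
    for turn in history or []:
        # Earlier turns must use one of the known roles
        if turn.get("role") not in ALLOWED_ROLES:
            raise ValueError(f"unknown role: {turn.get('role')!r}")
        messages.append({"role": turn["role"], "content": turn["content"]})
    messages.append({"role": "user", "content": user})
    return messages


messages = build_messages(
    system="You are an assistant aimed at providing kind and accurate information.",
    user="Hi. How's the weather today?",
)
print(json.dumps(messages, indent=2))
```

Keeping the system message fixed while appending turns is what lets the persona persist across a multi-turn conversation.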

Branch Management Strategies for Interactive Prompts

The framing strategies and role message structure we've discussed so far help keep an LLM's responses within a relatively consistent framework. However, because language generation is inherently probabilistic, even a slight change in input can cause the output to diverge significantly. The more candidate words compete with similar probabilities, the higher the chance that the model produces a response that strays from the user's original intent. Information theory describes this situation as high uncertainty, or entropy.

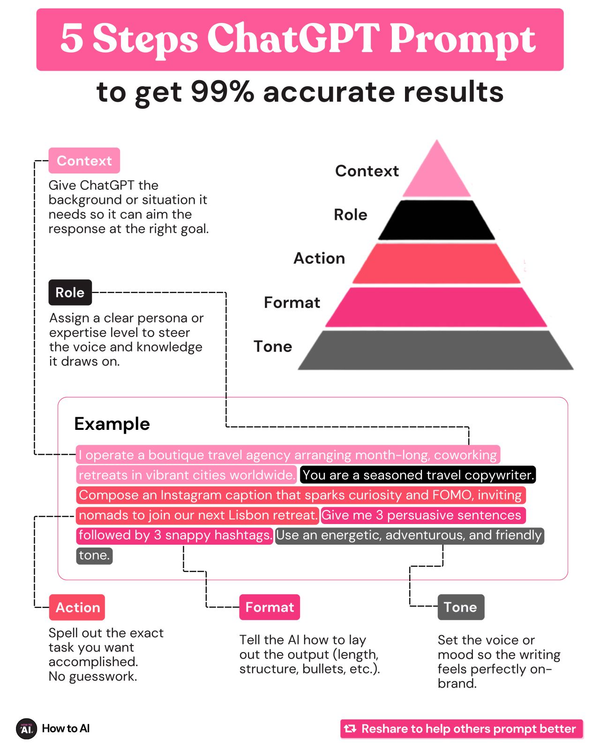
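Entropy can be computed directly from a probability distribution with Shannon's formula, H = -sum(p * log2(p)), measured in bits. The sketch below compares two hypothetical next-word distributions: one where a single candidate dominates and one where four candidates are equally likely. Lower entropy means fewer plausible branches for the output to take.

```python
import math


def entropy_bits(probs):
    """Shannon entropy in bits; zero-probability entries contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


confident = [0.9, 0.05, 0.05]      # one candidate dominates (hypothetical)
spread = [0.25, 0.25, 0.25, 0.25]  # four equally likely candidates (hypothetical)
print(round(entropy_bits(confident), 3))
print(entropy_bits(spread))  # 2.0
```

The peaked distribution comes out at roughly 0.57 bits versus 2 bits for the uniform one; the prompt techniques that follow aim to push the model toward the former.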

In such cases, prompt design becomes the most effective form of intervention. All the prompts in this chapter are taken from a YouTube video by Dr. Kang Su-jin, with additional explanations added.

Pay particular attention to how each prompt constrains unnecessary probabilities in the output, guiding the model toward more consistent and targeted results.

1) Spatial Structuring: Limit Responses With a Data Schema

Instead of letting the model generate answers with complete freedom, you can design prompts that confine its output to a predefined data structure. For example, when prompting the model to write an essay about a future city in the year 2150, you can provide a clearly defined JSON-style structure, as shown below:

This is a function for writing an essay about a future city in 2150. Provide explanations for each section and sub-item.

```
# Data Structure
data = {
    "CityOverview": {
        "Population": "Description",
        "Location": "Description",
        "Key Features": ["Feature1", "Feature2", "Feature3"]
    }
}
```

Defining the data structure within the prompt guides the model to focus solely on the specified fields and populate them accordingly. This keeps the model from veering off in unexpected directions and produces stable results within the intended scope.
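Defining the structure in the prompt also makes the reply checkable on the application side. The sketch below assumes the model was asked to reply with JSON matching the structure above and flags missing, unexpected, or wrongly shaped fields. The function `schema_problems` and the sample reply are hypothetical; a production system might use a dedicated schema validator instead.

```python
import json

# Mirrors the data structure given in the prompt
SCHEMA = {
    "CityOverview": {
        "Population": "Description",
        "Location": "Description",
        "Key Features": ["Feature1", "Feature2", "Feature3"],
    }
}


def schema_problems(output, schema, path=""):
    """List fields that are missing, unexpected, or of the wrong kind compared with the schema."""
    problems = []
    for key, expected in schema.items():
        where = f"{path}.{key}" if path else key
        if key not in output:
            problems.append(f"missing: {where}")
        elif isinstance(expected, dict):
            if isinstance(output[key], dict):
                problems.extend(schema_problems(output[key], expected, where))
            else:
                problems.append(f"not an object: {where}")
        elif isinstance(expected, list) and not isinstance(output[key], list):
            problems.append(f"not a list: {where}")
    for key in sorted(output.keys() - schema.keys()):
        where = f"{path}.{key}" if path else key
        problems.append(f"unexpected: {where}")
    return problems


# Hypothetical reply: "Key Features" is missing and an extra "Climate" field was added
reply = '{"CityOverview": {"Population": "12 million", "Location": "Coastal", "Climate": "Mild"}}'
print(schema_problems(json.loads(reply), SCHEMA))
```

When the list is non-empty, one option is to re-prompt the model with the listed problems.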

2) Separation of Fact and Opinion: Clearly Separate Output Fields to Reduce Uncertainty

When the model indiscriminately generates "facts" and "opinions" together in a single response, the two areas can become entangled, increasing the overall uncertainty (entropy) of the result. To prevent this, you can provide prompts that clearly define separate output categories, such as facts, opinions, speculations, and uncertainties.

Then, organize your response using the format below:

- Fact: { }

- Your Opinion: { }

- Speculation: { }

- Uncertainties: { }

- Topic:

Using this prompt, the model separates its response into four distinct sections, filling each with only the relevant information. As a result, facts and opinions are clearly separated, improving both the clarity and quality of the response.

Ultimately, the model keeps its full generative capability, while the user can choose to rely only on the Fact section, keeping opinions and speculation out of the information they act on.
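Because each section starts with a fixed label, the reply can be split mechanically so that only the Fact section is passed on. The sketch below assumes the model follows the "- Label: { ... }" format from the prompt above; `split_sections` and the sample reply are hypothetical.

```python
import re

# Section labels taken from the prompt
SECTIONS = ("Fact", "Your Opinion", "Speculation", "Uncertainties")
LABEL = re.compile(r"^-\s*(" + "|".join(map(re.escape, SECTIONS)) + r")\s*:\s*(.*)$")


def split_sections(reply):
    """Map each labeled section to its text; unlabeled lines extend the previous section."""
    sections = {}
    current = None
    for raw in reply.splitlines():
        line = raw.strip()
        match = LABEL.match(line)
        if match:
            current = match.group(1)
            # Drop the { } placeholder braces if the model echoed them back
            sections[current] = match.group(2).strip().strip("{}").strip()
        elif current is not None and line:
            sections[current] = (sections[current] + " " + line).strip()
    return sections


# Hypothetical model reply following the four-part format
reply = """- Fact: { Water boils at 100 degrees Celsius at standard atmospheric pressure. }
- Your Opinion: { This makes it a convenient reference point. }
- Speculation: { Most readers already know this. }
- Uncertainties: { None for this topic. }"""

facts_only = split_sections(reply)["Fact"]
print(facts_only)
```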

3) Answer Simplification: Reduce Branching by Simplifying Response Format

Sometimes, a simple instruction like "keep your answer short" can be a surprisingly effective prompt strategy. For example, if the model is restricted to responding only with a simple "yes" or "no," it avoids the need to construct complex sentences and stays within a narrow set of options.

If you know: yes

If you don't know: no

* Do not explain.

By clearly limiting the response format in this way, you eliminate opportunities for the model to consider unnecessary variations, thereby significantly reducing the uncertainty (entropy) of the output. As a result, you can achieve highly consistent and unambiguous short-form answers.
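The same strictness can be enforced when reading the reply. A two-option answer carries at most log2(2) = 1 bit of entropy, so anything outside those two options signals that the model broke the format. The sketch below, a hypothetical `parse_yes_no` helper, tolerates only differences in case, surrounding whitespace, and a trailing period.

```python
from typing import Optional


def parse_yes_no(reply: str) -> Optional[bool]:
    """Return True for "yes", False for "no", and None for anything else."""
    normalized = reply.strip().rstrip(".").strip().lower()
    if normalized == "yes":
        return True
    if normalized == "no":
        return False
    return None  # format violated: e.g., retry the request or flag it for review


for reply in ["yes", " No. ", "Yes, because the data shows..."]:
    print(repr(reply), "->", parse_yes_no(reply))
```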

4) Structure "Following": Restrict Response Scope Using Clear Guidelines

You can give the model a clear, specific content format to minimize cases where the response diverges in unexpected directions. For example, when requesting an article on immigration policy, you can present a top-down structure along with additional information items, as shown below:

- Complete the content by strictly following the structure below.

[Top]

Neutral and fact-based explanation about immigration policy

[Sub]

- Use neutral language

- Unbiased expressions

- Exclude emotional expressions

- Exclude value-judgment expressions

- Information based on facts

[Include Additional Information]

- Key components of immigration policies

- Purpose and function of immigration policies

- Statistical data on how immigration policies impact the national economy, society, and culture

Giving the prompt a clear [main topic → detailed guidelines → additional information] structure keeps the model generating only the intended content, without deviating from the set framework. As a result, the consistency and accuracy of the output greatly improve.
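When the same layout is reused across topics, it can help to generate the prompt from its parts instead of editing text by hand. The sketch below renders the [Top] → [Sub] → [Include Additional Information] layout shown above; the function name `build_structured_prompt` is hypothetical, and the section labels and items are copied from the example prompt.

```python
def build_structured_prompt(top, sub, additional):
    """Render the [Top] -> [Sub] -> [Include Additional Information] layout as one prompt string."""
    lines = [
        "- Complete the content by strictly following the structure below.",
        "",
        "[Top]",
        top,
        "",
        "[Sub]",
    ]
    lines += [f"- {item}" for item in sub]
    lines += ["", "[Include Additional Information]"]
    lines += [f"- {item}" for item in additional]
    return "\n".join(lines)


prompt = build_structured_prompt(
    top="Neutral and fact-based explanation about immigration policy",
    sub=[
        "Use neutral language",
        "Unbiased expressions",
        "Exclude emotional expressions",
        "Exclude value-judgment expressions",
        "Information based on facts",
    ],
    additional=[
        "Key components of immigration policies",
        "Purpose and function of immigration policies",
        "Statistical data on how immigration policies impact the national economy, society, and culture",
    ],
)
print(prompt)
```

Swapping only the `top` and `additional` arguments keeps the neutrality guidelines identical across topics.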

5) Compound Prompts for Software Services: Control Responses by Combining How-to, Examples, and Response Rules

Real-world software services often use compound prompts that combine three core components: clear instructions (How-to), specific examples (Examples), and response rules (Response Rules). This approach effectively narrows the model's output, helping to maintain a consistent format and tone.

Below is a real-life use case.

[How-to]

1) Categorize the user intent into three types

2) Generate response with an emotional touch for each type

[Examples]

Case 1: Neutral response

- User: Summarize the ESTJ personality in 5 lines.

- Model: ESTJ is the "Practical Manager" type.

Case 2: Satisfied response

...

[Response Rules]

1. Respond in Korean

2. Sentence length within 20 characters

3. Do not answer the original question directly; make indirect suggestions

4. Use of emojis is allowed

A prompt configured this way has three components:

- How-to: Clearly defines how the model should construct its response and limits potential branching paths.

- Examples: Serves as a concrete guide by illustrating the desired output format in advance.

- Response Rules: Narrows the expressive range of the final output, helping the model maintain consistency in both style and structure.

As a result, the model operates strictly within predefined guidelines, significantly improving the consistency and quality of its responses.
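A compound prompt can likewise be assembled from its three parts, and simple response rules can be double-checked after generation. In the sketch below, `build_compound_prompt`, `Example`, and `breaks_length_rule` are hypothetical names. The length check uses a naive split on sentence punctuation and counts characters with `len`, which is an assumption about how the 20-character rule is measured.

```python
from dataclasses import dataclass


@dataclass
class Example:
    case: str
    user: str
    model: str


def build_compound_prompt(how_to, examples, rules):
    """Join the How-to, Examples, and Response Rules sections into a single prompt."""
    parts = ["[How-to]"]
    parts += [f"{i}) {step}" for i, step in enumerate(how_to, start=1)]
    parts += ["", "[Examples]"]
    for ex in examples:
        parts += [ex.case, f"- User: {ex.user}", f"- Model: {ex.model}"]
    parts += ["", "[Response Rules]"]
    parts += [f"{i}. {rule}" for i, rule in enumerate(rules, start=1)]
    return "\n".join(parts)


def breaks_length_rule(reply, max_chars=20):
    """Return True if any sentence (naively split on . ! ?) exceeds max_chars characters."""
    sentences = reply.replace("!", ".").replace("?", ".").split(".")
    return any(len(s.strip()) > max_chars for s in sentences if s.strip())


prompt = build_compound_prompt(
    how_to=[
        "Categorize the user intent into three types",
        "Generate response with an emotional touch for each type",
    ],
    examples=[
        Example("Case 1: Neutral response",
                "Summarize the ESTJ personality in 5 lines.",
                'ESTJ is the "Practical Manager" type.'),
    ],
    rules=[
        "Respond in Korean",
        "Sentence length within 20 characters",
        "Do not answer the original question directly; make indirect suggestions",
        "Use of emojis is allowed",
    ],
)
print(prompt)
```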

The five approaches we've explored so far (spatial structuring, separating facts from opinions, simplifying responses, structure following, and compound prompts for software services) are all strategies designed to reduce unnecessary branching by limiting the model's probabilistic output space in advance. This lowers the entropy of responses, producing stable and reliable outputs in the desired format and tone.

However, we can take it one step further. Beyond simply narrowing the model's output, there are prompt strategies that guide the model to perform deeper reasoning and logical inference. In the next chapter, we'll explore two such methods in detail: Chain-of-Thought (CoT) and Tree-of-Thought (ToT) prompting, which are techniques for enabling LLMs to generate more complex and structured lines of thought.

© 2025 AFI, INC. All Rights Reserved. All Pictures cannot be copied without permission.
