Use Strategic A/B Testing to Drive Growth: For B2B Products with a Limited User Base

The Challenge of B2B Product Testing

A/B testing is widely promoted as a key strategy for growth and success. However, most of the available resources focus on B2C (business-to-consumer) products. Why is that?

A/B testing is more challenging for B2B (business-to-business) products for several reasons:

- B2B companies generally have a smaller customer base compared to B2C, making it difficult to gather a large enough sample size for experiments.

- B2B businesses often prioritize fulfilling commitments to specific customers over identifying broader trends across a wider audience. This is especially true of contract-based business models, which add another layer of complexity.
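To make the sample-size problem concrete, here is a minimal Python sketch of the standard normal-approximation formula for how many users each group needs in a two-sided, two-proportion test. The function name, the default significance level (alpha = 0.05) and power (0.8), and the example numbers are illustrative assumptions, not BACKND figures.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(p_baseline: float, relative_lift: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Users needed in EACH group to detect a relative lift in a conversion
    rate with a two-sided two-proportion z-test (normal approximation)."""
    p1 = p_baseline
    p2 = p_baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_beta = NormalDist().inv_cdf(power)           # quantile for desired power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Hypothetical: 20% baseline conversion, hoping to detect a 10% relative lift.
    print(sample_size_per_group(0.20, 0.10))
```

With these hypothetical inputs (20% rising to 22%), the formula asks for roughly 6,500 users per group, about 13,000 in total, which a B2B product with a small customer base may take a long time to accumulate.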

Why you should still experiment: because it's B2B!

Paradoxically, in the B2B space, the makers of the product are often not the end users. This creates a situation where we may be developing products without fully grasping the customers' needs. A/B testing becomes the key to bridging this gap.

However, it's important not to test for its own sake.

Designing, implementing, and analyzing A/B tests takes significant resources. Choosing to run a test also means choosing not to spend those resources on something else, so weigh the opportunity cost carefully.

Effective testing requires three things:

- Leadership willing to invest in A/B testing.

- A technical environment that supports designing, running, and analyzing tests.

- A hypothesis worth testing.

BACKND Has a Team for Testing: Focus on Onboarding

To understand how testing works behind the scenes at BACKND, it helps to look at the overall structure of our research and development (R&D) team.

The R&D team is divided into two main groups: one focused on improving the product through customer interviews, and the other focused on improving the product through testing.

The interview-driven team identifies customer needs primarily through customer interviews and through requests raised in sales meetings and customer inquiries. It delivers the product on a set date after a lengthy QA process, and its goal is to reliably deliver the features the target audience wants.

The testing team, on the other hand, primarily uses A/B testing to analyze customer behavior and improve the product quickly. Its goal is to improve the onboarding process so that a wide range of users have a seamless experience.

Why would the testing team focus on onboarding when the user lifecycle is long and has many conversion steps? There are three main reasons:

- Early in the funnel, more users pass through each step, so it’s easier to collect a sample large enough to reach statistical significance.

- As the number of new customers grew, it became impossible to manage onboarding issues through "meetings" alone. An automated onboarding process became necessary to smoothly integrate many users.

- Onboarding changes have little direct impact on end users (in BACKND’s case, game players), so testing can be conducted without compromising the stability of the service.
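The first reason can be illustrated with the inverse calculation: for a fixed number of users, what is the smallest relative lift a test can reliably detect? The sketch below reuses the normal approximation with equal group sizes and a simplified variance term. The funnel steps, monthly volumes, and baseline rates are hypothetical, chosen only to show how detectable effects grow as users drop off deeper in the funnel.

```python
from math import sqrt
from statistics import NormalDist


def relative_mde(p_baseline: float, n_per_group: int,
                 alpha: float = 0.05, power: float = 0.8) -> float:
    """Approximate smallest relative lift detectable with n_per_group users
    in each of two groups (two-sided test, normal approximation, using the
    baseline variance p*(1-p) for both groups)."""
    z = NormalDist().inv_cdf(1 - alpha / 2) + NormalDist().inv_cdf(power)
    abs_mde = z * sqrt(2 * p_baseline * (1 - p_baseline) / n_per_group)
    return abs_mde / p_baseline


# Hypothetical funnel: (users reaching the step per month, baseline rate of
# moving on to the next step). Each step's users are split 50/50.
FUNNEL = {
    "sign-up -> project created": (2000, 0.60),
    "project created -> SDK integrated": (1200, 0.40),
    "SDK integrated -> paid plan": (480, 0.10),
}

if __name__ == "__main__":
    for step, (users, rate) in FUNNEL.items():
        mde = relative_mde(rate, users // 2)
        print(f"{step}: detectable relative lift ~ {mde:.0%}")
```

Under these made-up numbers, the first step can detect a relative lift of roughly 10%, while the last step needs a lift of more than 70% before a test is likely to notice it.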

Let's explore how the A/B testing team improves the customer onboarding experience.

How to improve the onboarding experience

1. Skipping the test

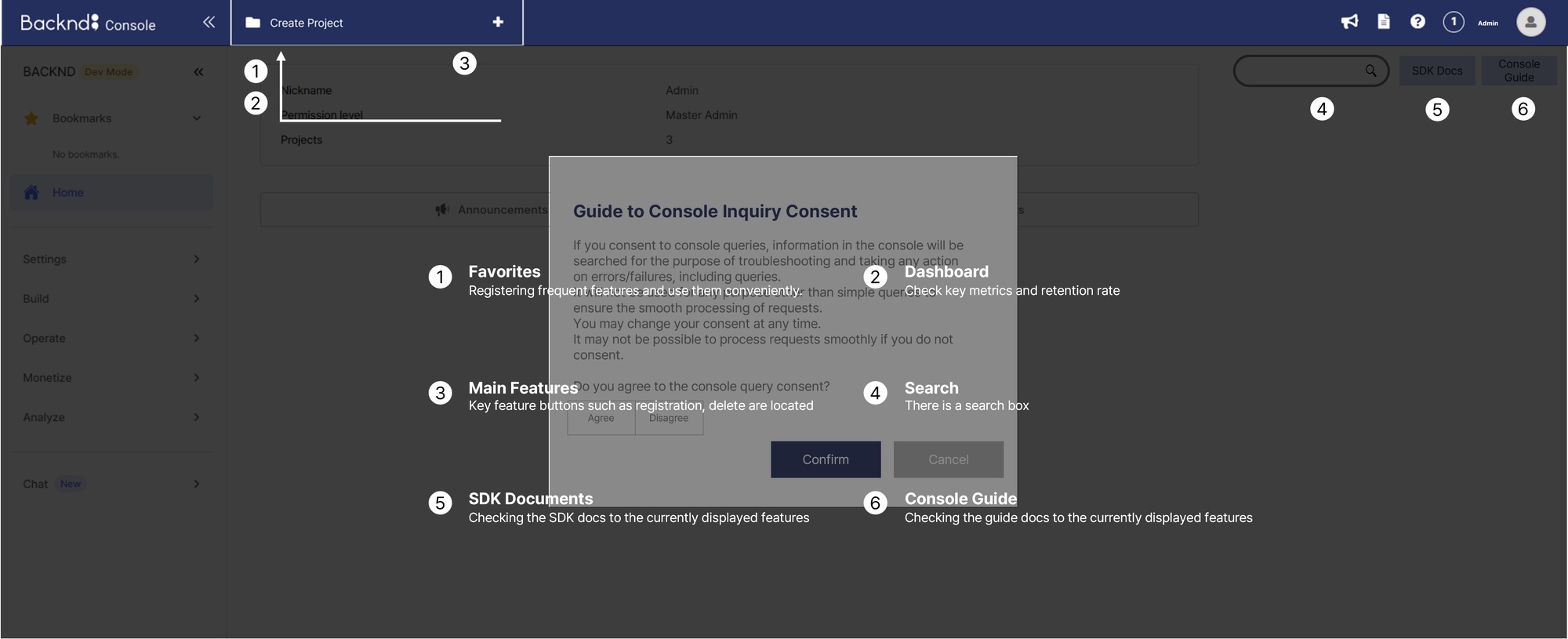

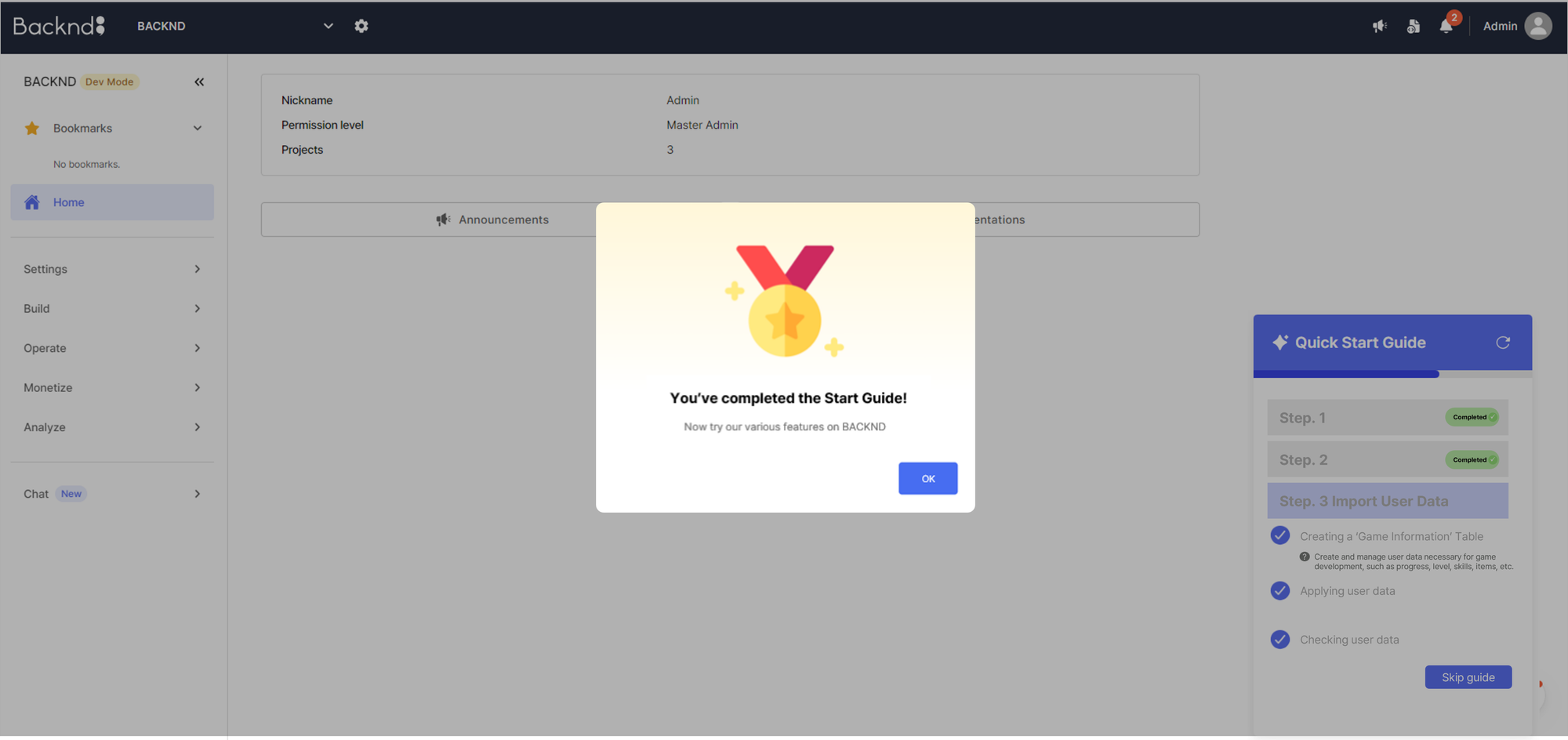

For a long time, improving the onboarding experience wasn’t a priority. Because we put little effort into it, the first screen became cluttered with overlapping modals.

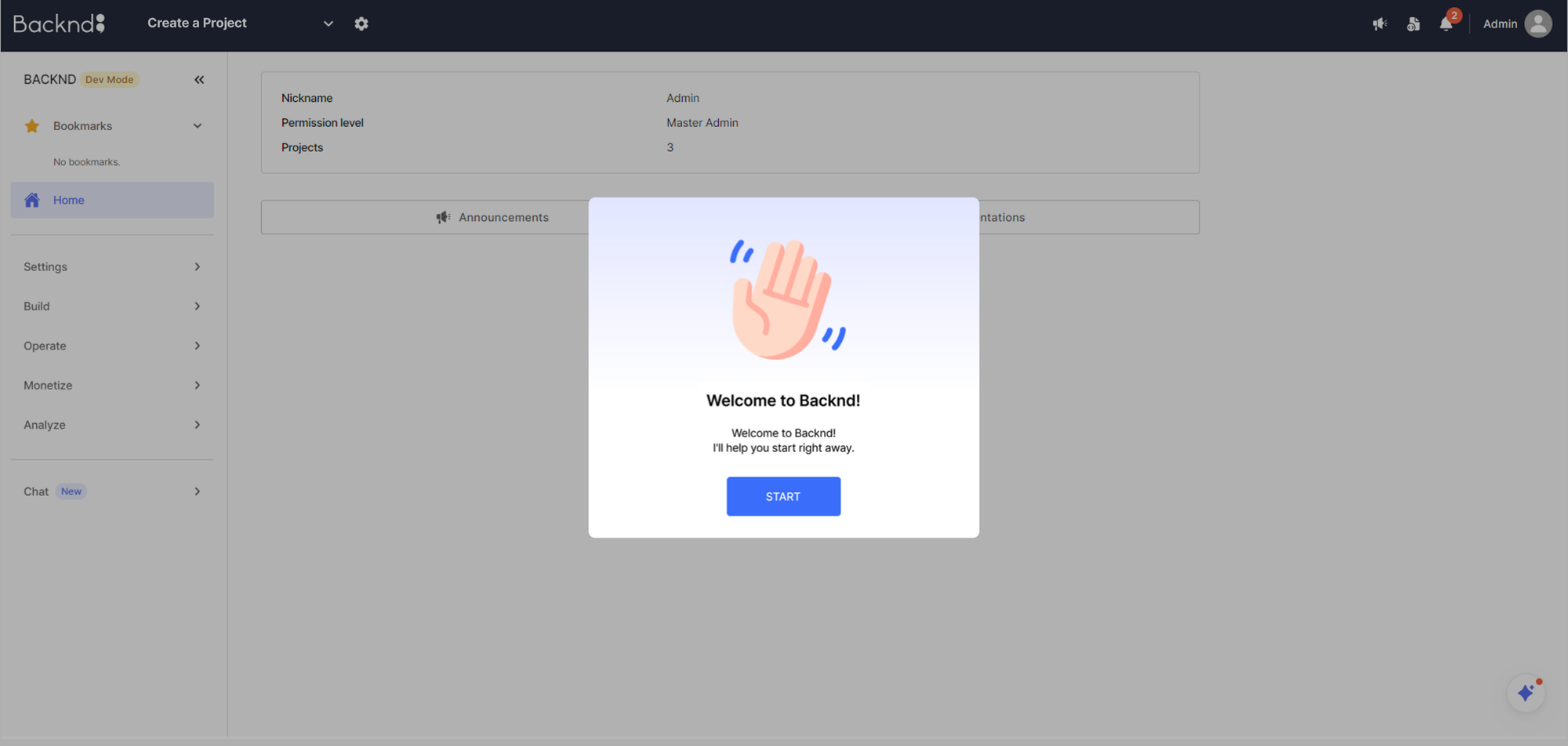

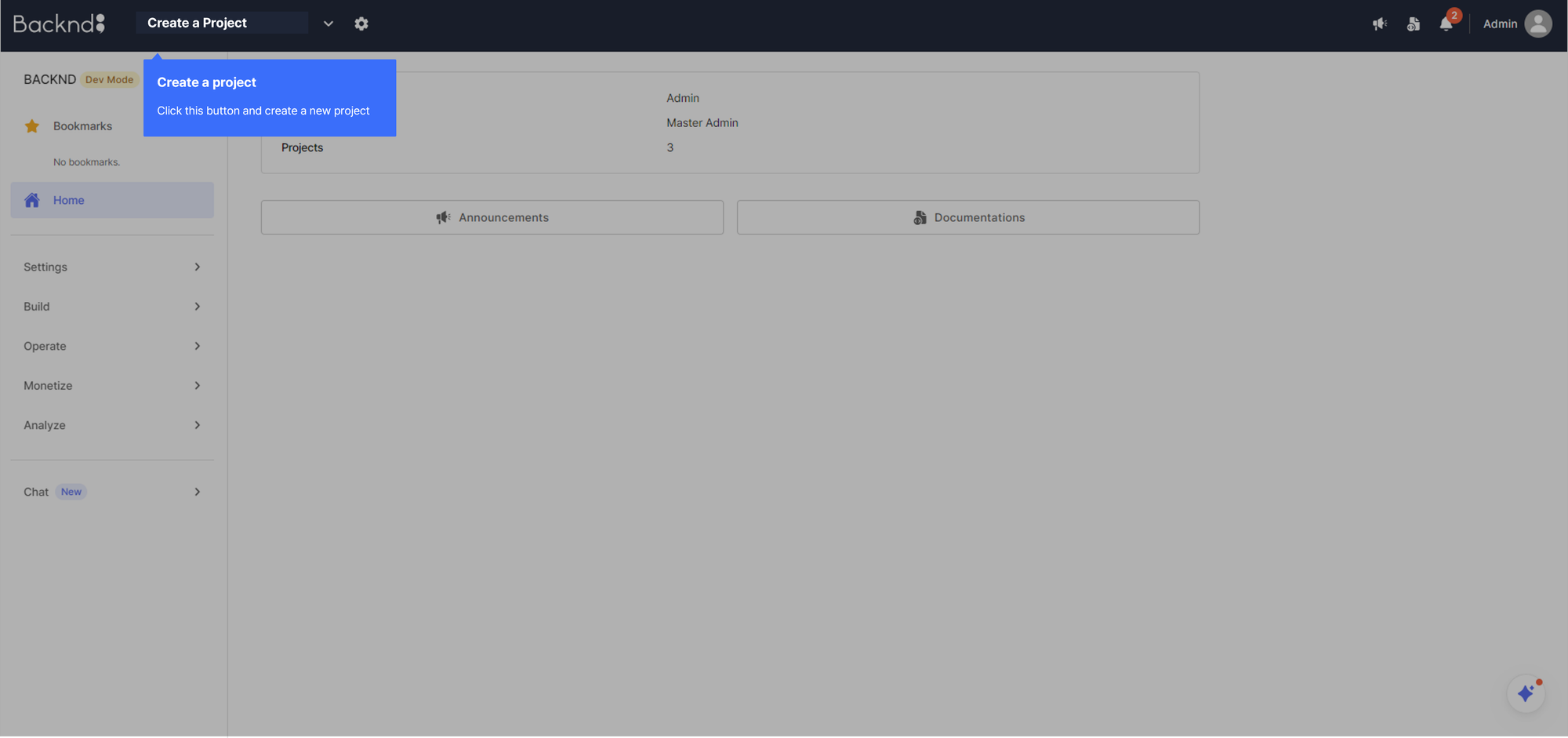

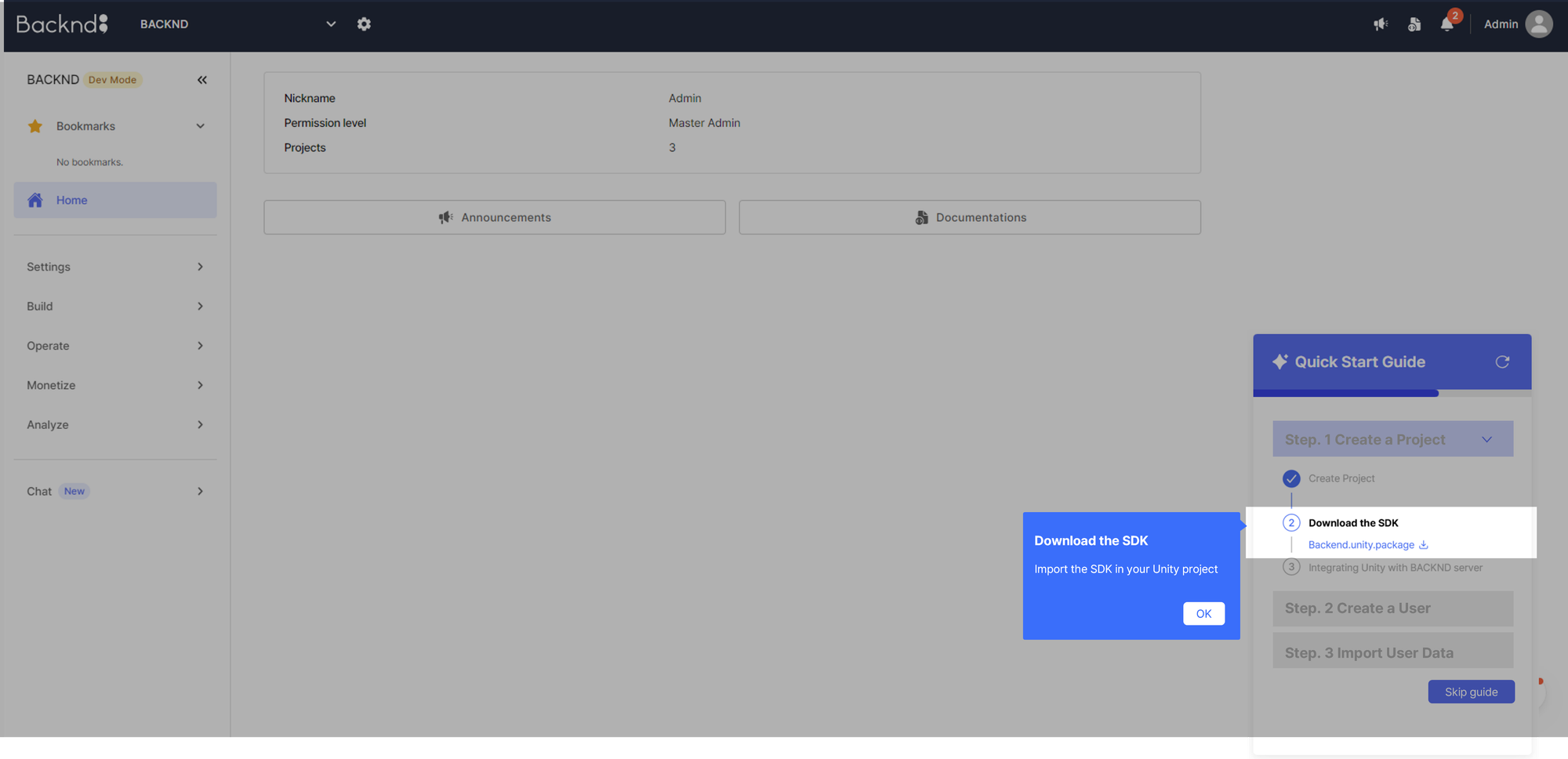

The team was convinced that this setup was not user-friendly, so instead of running an experiment, we opted for a straightforward update. We redesigned the UX to deliver information step by step and in a friendlier tone, much like a face-to-face meeting.

After the redesign, project creation rose by 13.5% and SDK integration by 20.7%! However, we can't attribute this improvement solely to the design change, because we didn't run an A/B test with all other variables controlled. Nonetheless, from a company-wide perspective, skipping the test was the right call: it let us put our limited resources toward much more than improved onboarding.

2. Finally testing!

With this solid foundation in place, the team began A/B testing. Soon, ideas emerged that no one could confirm or rule out without validating them through an A/B test.

Would removing certain steps from the start guide improve the user experience?

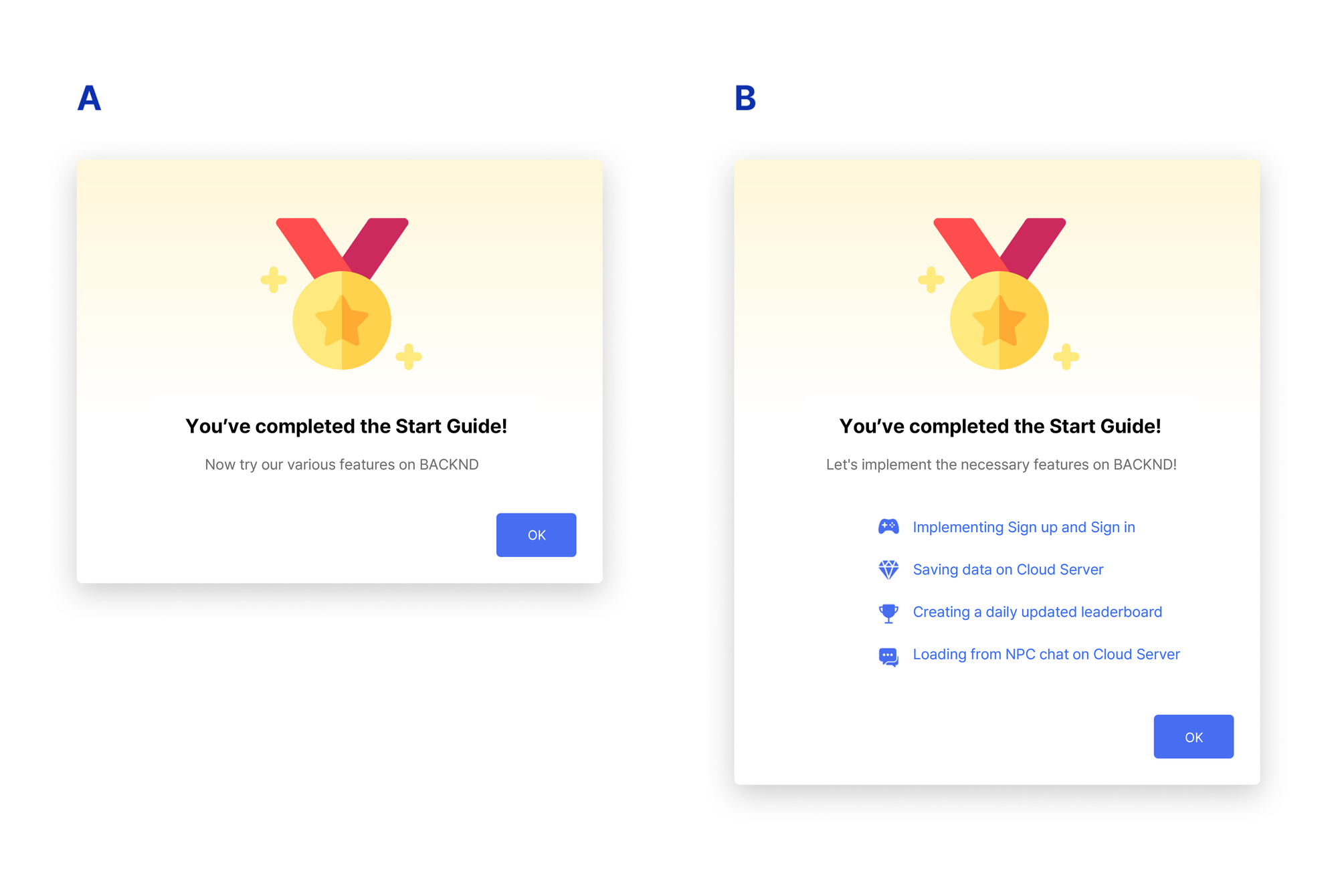

Would providing audio-visual aids after the start guide enhance feature learning?

Would using terms familiar to game developers, rather than predefined service terms, lead to better understanding?

We put these hypotheses to A/B tests, which let the team make informed improvements. The tests moved the product a solid step forward, and two experiments in particular stand out.
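Each of these tests depends on splitting users into groups consistently. The article doesn't describe how BACKND assigns users, so the following is only a common pattern, sketched in Python: hash a stable identifier together with the experiment name so the same account always lands in the same group. Assigning per account rather than per individual user is an assumption here, made because several developers from one B2B customer may work in the same project; the experiment name and account IDs are hypothetical.

```python
import hashlib


def assign_variant(account_id: str, experiment: str,
                   variants: tuple[str, ...] = ("A", "B")) -> str:
    """Deterministically map an account to a variant.

    Hashing the experiment name together with the account ID keeps an
    account's group stable across sessions, while different experiments
    get independent splits.
    """
    key = f"{experiment}:{account_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Interpret the first 8 bytes of the digest as an unsigned integer bucket.
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    # Hypothetical experiment name and account IDs.
    for account in ("acct-001", "acct-002", "acct-003"):
        print(account, assign_variant(account, "start-guide-steps"))
```

A side benefit is that no assignment table has to be stored; the trade-off is that changing the list of variants mid-experiment reshuffles existing accounts.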

Reaching Customers in Their Language: A Successful Experiment

New users of BACKND often struggled to match their needs with the features our platform offered. This mismatch led to frustration and increased the likelihood of user churn, even after SDK integration.

We hypothesized that a how-to document written in "customer language" would solve this problem. The document explained features in terms customers could understand intuitively, and it led to a 15% increase in active use of the SDK. This experiment showed us how important it is to think like a customer rather than a service provider.

Not every test produced the results we expected, though. Next, I'll share an experiment whose result didn't match our hypothesis.

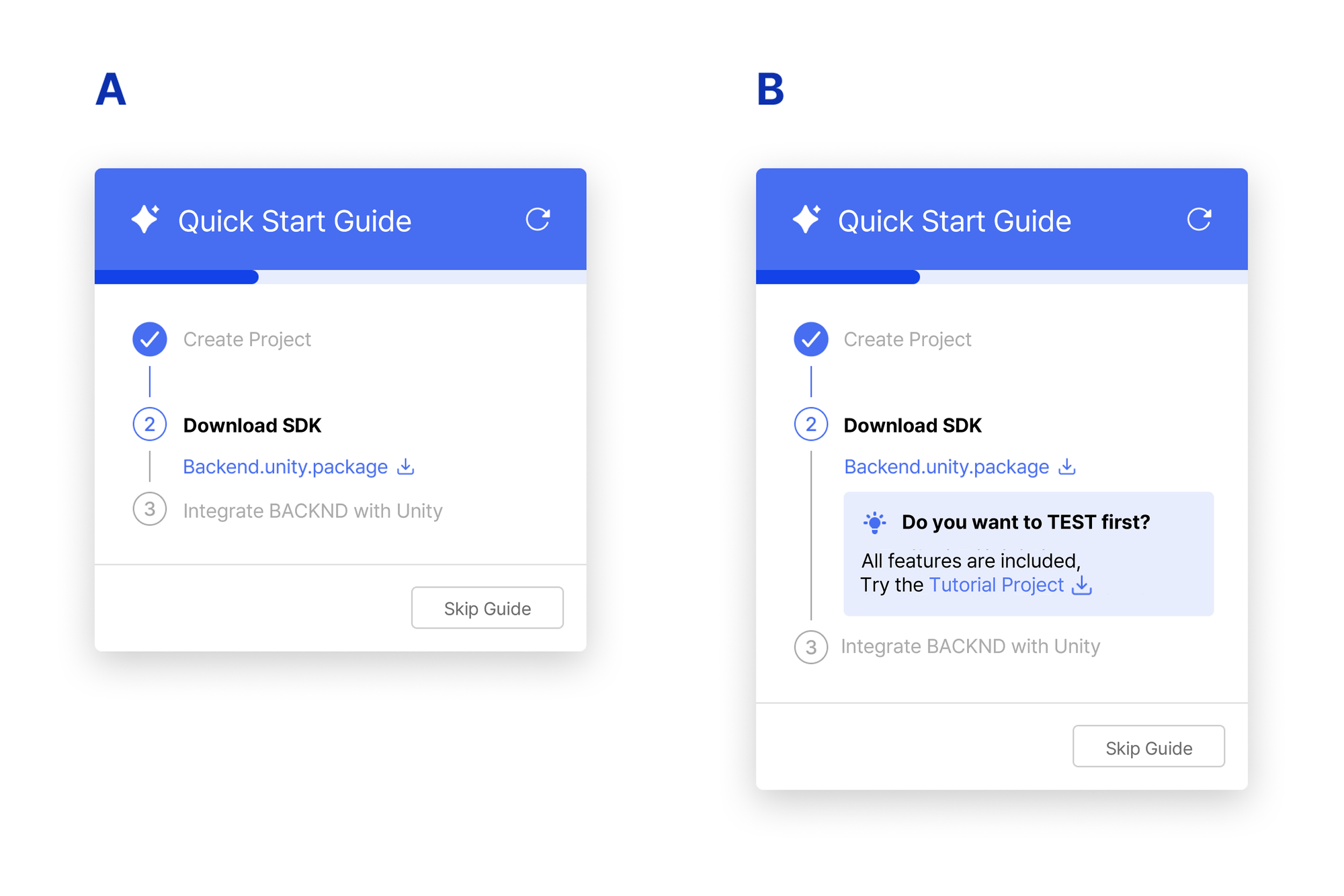

Providing a sample project: An unexpected result

This experiment challenged a hypothesis: "Users who are undecided about whether to use BACKND will want to try features before implementing them." Group A received the SDK (Software Development Kit), while Group B received the SDK plus a sample project with all features already implemented, designed to let developers quickly try different features. We expected this to increase use of our service among users who had signed up to try it out.

Contrary to our expectations, Group B's conversion rate was 16% lower than Group A's. This meant that either my hypothesis was wrong or my experimental design couldn't properly test it. The contextual data showed that the design was the problem, which let me prepare a more refined experiment.
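The article doesn't report group sizes, so the sketch below uses made-up counts that reproduce a 16% relative drop (25% vs. 21% conversion, 400 users per group, reading "16% lower" as a relative difference) to show how such a gap can be checked with a two-proportion z-test. It is a minimal Python sketch using the pooled-variance normal approximation, not the analysis BACKND actually ran.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (relative change of B vs. A, z statistic, two-sided p-value)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both groups convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    relative_change = (p_b - p_a) / p_a
    return relative_change, z, p_value


if __name__ == "__main__":
    # Hypothetical counts: A = SDK only, B = SDK + sample project.
    rel, z, p = two_proportion_z_test(100, 400, 84, 400)
    print(f"relative change {rel:.0%}, z = {z:.2f}, p = {p:.3f}")
```

With these hypothetical counts the p-value comes out around 0.18, so a gap that looks large would not be statistically significant at the 5% level. On a small user base, that is a reason to check significance before reading too much into any surprising result, in either direction.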

Experiments that don't turn out as expected are valuable in their own right. By analyzing why, we gain insights we hadn't seen before and a clearer idea of how to improve the product. In that sense, finding out that your hypothesis is wrong is not a failure but an important data point that can steer you in a better direction.

Conclusion: A/B testing is a powerful tool for B2B growth

Although BACKND is a B2B product where stability is essential, the team recognized that A/B testing was needed to uncover blind spots in our understanding of customer churn. Even small changes to a first impression can significantly affect conversion rates, which makes testing at this stage highly effective.

B2C and B2B products serve different customers, but both aim to overcome misperceptions, make better decisions, and deliver more value in order to grow. A/B testing is a powerful tool for validating intuitions, so a small user base shouldn't be a reason to avoid experiment-based data analysis. Instead, design tests thoughtfully, with the limited user base in mind. This will help you identify customers' real needs and optimize the product.
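One concrete way to keep a small user base in mind: when groups are very small, the normal approximation behind the z-test becomes unreliable. A standard alternative, suggested here rather than taken from the article, is Fisher's exact test, which computes the p-value directly from the hypergeometric distribution. A minimal pure-Python sketch of the two-sided version, with hypothetical pilot numbers:

```python
from math import comb


def fisher_exact_two_sided(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided Fisher's exact test for a 2x2 table of conversions.

    Conditioning on the total number of conversions, the count in group A
    follows a hypergeometric distribution. The p-value sums the
    probabilities of every table at least as unlikely as the observed one.
    """
    total_conv = conv_a + conv_b
    denom = comb(n_a + n_b, total_conv)

    def prob(x: int) -> float:
        # Probability that group A holds exactly x of the total conversions.
        return comb(n_a, x) * comb(n_b, total_conv - x) / denom

    observed = prob(conv_a)
    lo = max(0, total_conv - n_b)
    hi = min(n_a, total_conv)
    # A tiny relative tolerance treats floating-point near-ties as ties.
    p = sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= observed * (1 + 1e-7))
    return min(1.0, p)


if __name__ == "__main__":
    # Hypothetical pilot: 9 of 12 accounts converted with the change, 3 of 12 without.
    print(round(fisher_exact_two_sided(9, 12, 3, 12), 4))
```

For this hypothetical pilot the p-value is roughly 0.04. If SciPy is available, `scipy.stats.fisher_exact` provides the same test.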

For those facing similar challenges, I hope this article provides valuable insights.❤️

Sources

- Image source: Free illustrations from Streamline

© 2024 AFI, INC. All Rights Reserved. All Pictures cannot be copied without permission.
